While the tech bros of the world declare the singularity – the moment when artificial intelligence (AI) surpasses human intelligence – imminent, various AI systems are still struggling with tasks humans can perform with ease.

For instance, image generators struggle with hands, teeth, or a glass of wine that is full to the brim, while large language model (LLM) chatbots are easily vexed by problems that most 8-year-old humans can solve. They are also still prone to “hallucinations”: serving up plausible-sounding falsehoods rather than true information.

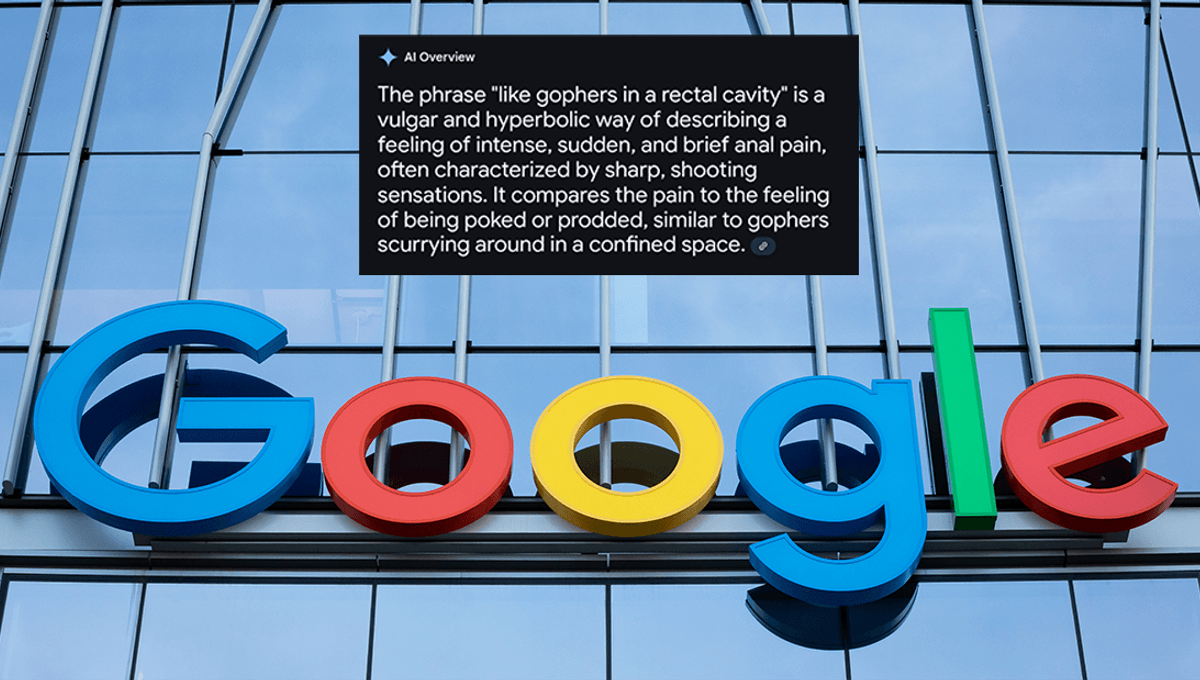

Despite these problems, search and other tech giants have been eager to build AI into their various products. In the latest in a long line of issues, Internet users have discovered that Google’s AI summary will spout plausible-sounding nonsense if you add the word “meaning” to the end of a search for a made-up phrase.

Several people tried the technique with invented phrases, with similar results.

“The phrase ‘rainbow trout in a chocolate volcano’ is a metaphorical way of describing a situation or a person’s state, often used to highlight a surprising or unexpected combination,” Google told one X user. “It implies a juxtaposition of seemingly contrasting elements: the freshness of rainbow trout with the sweetness and richness of a chocolate volcano.”

“‘Stick it to the diddly time’ is a slang expression meaning to resist authority or a system, or to refuse to conform to expectations,” it told a Redditor. “It’s a playful and defiant way of saying you’re not going to put up with something, or you’re going to do things your own way. The phrase ‘diddly time’ itself is a nonsensical phrase that adds to the playful, rebellious tone.”

While people may have already begun outsourcing their own critical thinking to AI, or relying on it for information, these chatbots are not really doing any fact-checking. What they do is put words in a pleasing order, based on their training data. They are more “spicy autocomplete” than Skynet or Optimus Prime.

When they cannot come up with a truthful answer (which really just means smushing together answers from humans in their dataset), they are prone to “hallucination” in their attempt to please their human users. In simple terms, they will sometimes talk crap at you rather than give you no answer at all.
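To make the “spicy autocomplete” idea concrete, here is a minimal sketch of a toy next-word generator. It is an illustration only, not how Google’s system or any real LLM is built: the three-sentence corpus, the `build_bigrams` and `explain` functions, and the `seed` parameter are all made up for this example. The point it shows is that the generator never checks whether the phrase you ask about actually exists; it just keeps picking a word that tended to follow the previous one.

```python
import random
from collections import defaultdict

# Tiny invented corpus standing in for training data (hypothetical, illustration only).
CORPUS = [
    "the phrase is a metaphorical way of describing a surprising situation",
    "the phrase is a slang expression meaning to resist authority",
    "the phrase is a playful way of saying you will do things your own way",
]

def build_bigrams(sentences):
    """Map each word to the list of words that followed it in the corpus."""
    followers = defaultdict(list)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            followers[current].append(nxt)
    return followers

def explain(query, followers, max_words=12, seed=0):
    """Produce a fluent-sounding 'definition' without ever looking the query up."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same text
    word = "the"
    output = [word]
    # Keep choosing a word that followed the current one in the corpus.
    while len(output) < max_words and followers.get(word):
        word = rng.choice(followers[word])
        output.append(word)
    return f"'{query}' means: " + " ".join(output)

FOLLOWERS = build_bigrams(CORPUS)
print(explain("rainbow trout in a chocolate volcano", FOLLOWERS))
```

Whatever phrase you pass in, the reply opens with a confident “the phrase is a…”, because the query is only pasted into the output and never influences the words that follow. A real LLM is vastly more sophisticated and does condition on your input, but the basic failure is similar: fluency comes from word patterns, not from checking facts.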

That’s not ideal for a service like Google, whose whole schtick has been to provide information to people seeking it. However, the issue appears to have been temporarily patched, with the AI overview turned off whenever you type in an uncommon or made-up phrase followed by the word “meaning”.

Source Link: Adding One Word To Searches Makes Google's AI Spout Pure, Unfiltered Nonsense