If there’s one thing mathematicians love, it’s being surprised. Bonus points if it comes in the form of something that looks nice and ties up a problem thought to be a lost cause.

So you can imagine it must have been a good day for Alexander Dunn and Maksym Radziwiłł when, on September 15 last year, they were able to finally upload a proof of Patterson’s Conjecture – a 45-year-old proposed solution to a problem that stretches all the way back to the 19th century.

“It was exciting to work on, but extremely high risk,” Dunn told Quanta Magazine. “I mean, I remember coming to my office at, like, 5 a.m. every morning straight for four or five months.”

What kind of problem could be worth such commitment? Two words: Gauss sums.

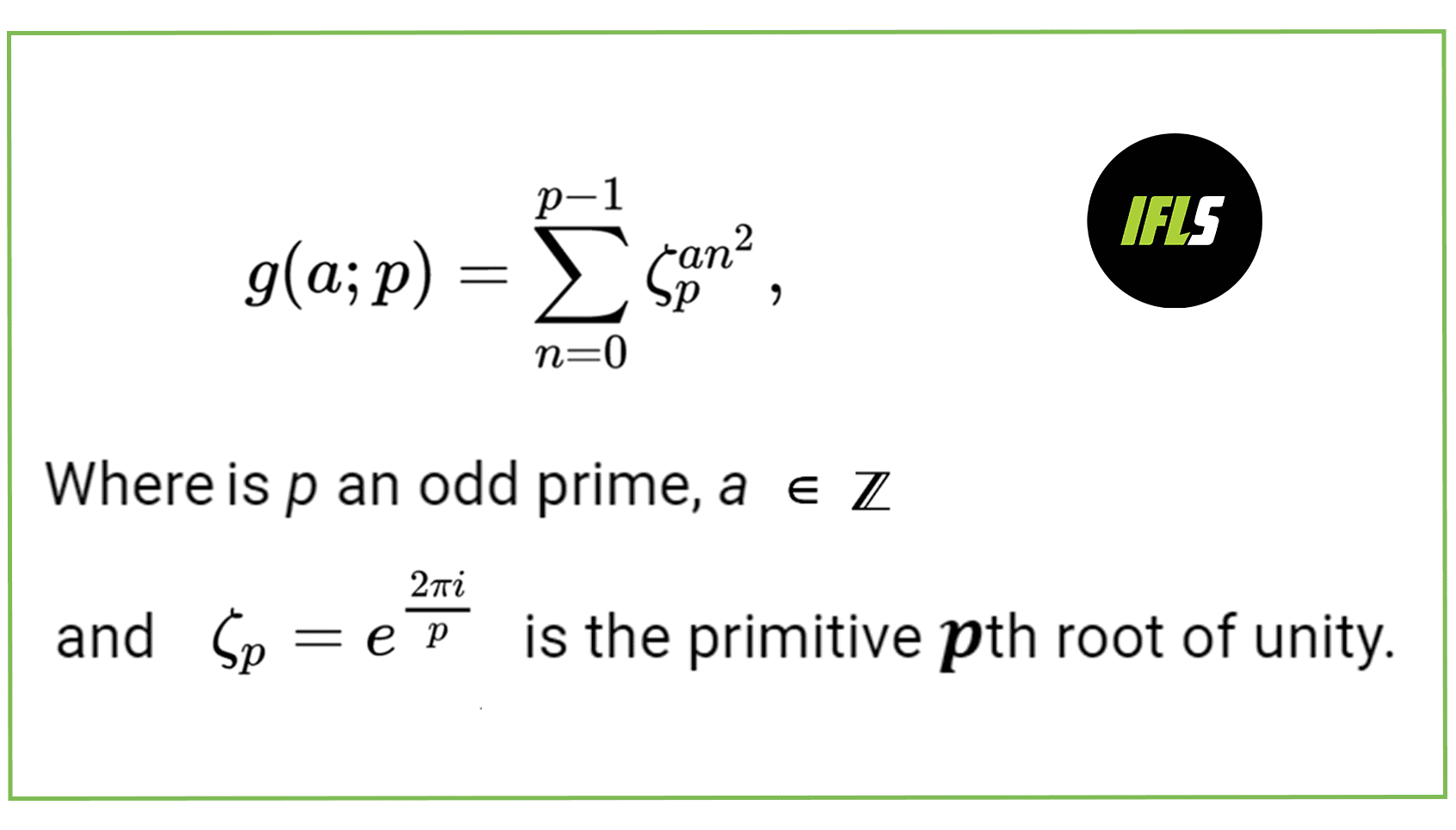

If you’re familiar with these quantities – named after Carl Friedrich Gauss, one of the most prolific mathematicians in history and the guy who first started playing around with the sums back in the 18th century – then you probably have some background in number theory, where they’re ubiquitous. Put simply, or as simply as things get in college-level math, they’re sums of roots of unity: you take all numbers which, say, cube to get one, and add them all together. In their quadratic form, they look like this:

Image credit: (C) IFLScience

So far so good, but the real problem starts when you move up a level – from quadratic to cubic. It’s a tiny change in one respect: all we’re doing is replacing the n2 with an n3 in the sum above. But the effect is pretty massive – which is why it took 175 years after Ernst Eduard Kummer originally began studying them for the case to be cracked.

Kummer’s Conjecture

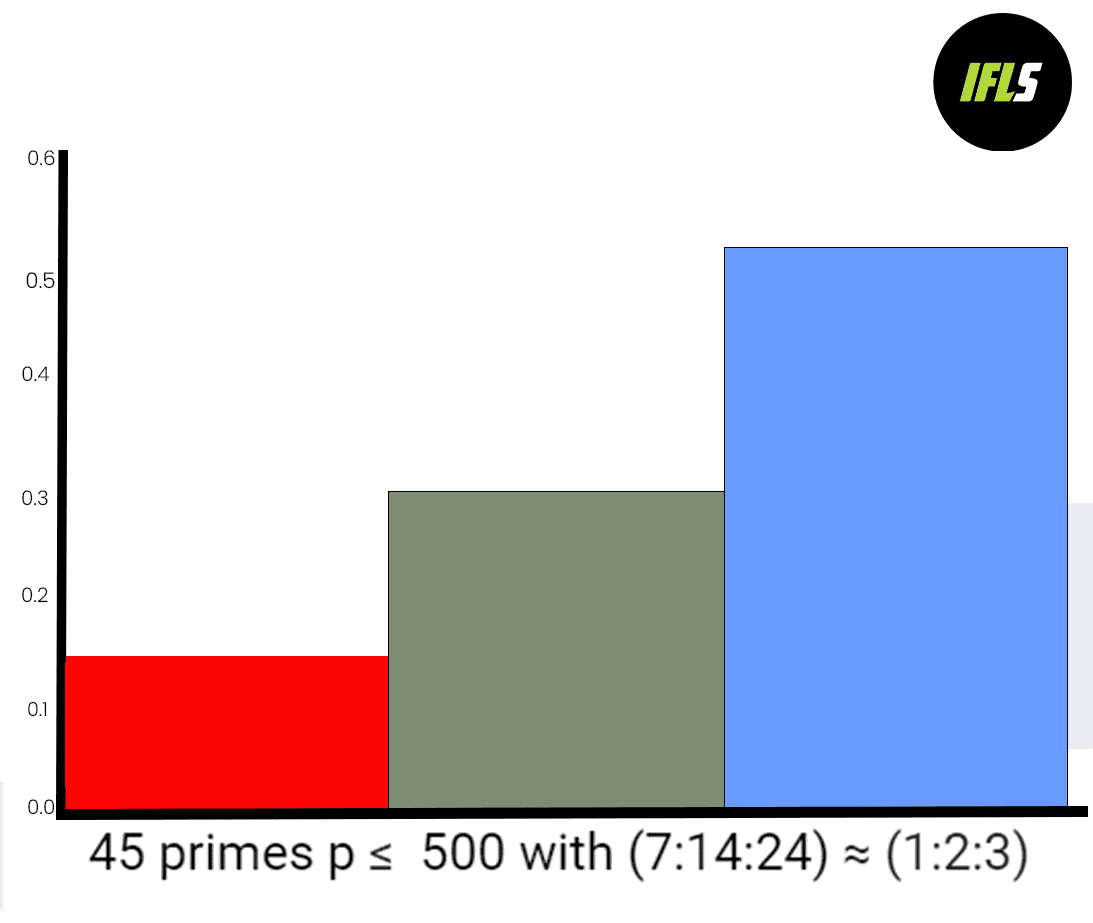

That’s not to say Kummer made no headway. For about a century, his 1846 conjecture was the closest thing the world had to an answer for the question of how the values found from cubic Gauss sums are distributed along the number line. And it was a brilliantly mathematically weird answer, too: he conjectured that for a prime number p, the results of these cubic Gauss sums could be split up in a very specific way, with half lying between √p and 2√p, a third between −√p and √p, and the final sixth being between −2√p and −√p.

Image credit: (C) IFLScience

He had churned through, by hand, cubic Gauss sums corresponding to the first 45 non-trivial prime numbers, and the conjecture looked good. But he couldn’t prove it – nobody could. In fact, it took until the early 1950s, with the dawn of the computer age, for mathematicians to think about taking it on again.

When they finally did, they found something unexpected. Kummer had been completely wrong.

“The calculation involved about 15 million multiplications counting the checking mentioned above. The values of p were introduced in blocks of 200. The entire calculation was carried out twice to ensure reliability,” wrote John Von Neumann and Herman Goldstine in their 1953 paper on Kummer’s Conjecture.

They had chosen the problem as a neat way to test a new toy they’d been allowed to play with: the first programmable, electronic, general-purpose digital computer, built in 1945, called ENIAC – verifying Kummer would just be a bonus. With the help of this digital brain, they – along with physicist and programmer Hedvig Selberg – managed to slightly improve on Kummer’s 45 sums, calculating the results for primes less than a whopping 10,000.

And as the number went up, the pattern disappeared. “[The] results would seem to indicate a significant departure from the conjectured densities and a trend towards randomness,” wrote Von Neumann and Goldstine.

Patterson’s Conjecture

But a thousand or so examples do not a proof make, and it would take another decade and a half before Kummer’s Conjecture was conclusively falsified. The pair of mathematicians responsible – a number theorist named Samuel Patterson and his grad student, Roger Heath-Brown – had shown that cubic Gauss sums were indeed distributed equally along the number line. Sort of.

What does that mean? Patterson had previously approached the problem slightly differently from his predecessors: he decided to see what would happen if you summed the values of the cubic Gauss sums. A set of X Gauss sums, he found, would sum to about X5/6 – that is, more than the square root of X, but less than X itself.

That told him something important. It had already been shown by earlier mathematicians that a set of truly random results would sum to about ±√X. So to find a total of X5/6 meant that the sums were basically random, but with a small extra factor making them slightly more likely to be positive than negative.

If this was what was going on, it would explain everything – why Kummer’s results seemed so non-random, and why randomness increased with the number of primes being calculated. It’s a problem of asymptotes: at smaller scales, that extra factor is strong enough to impact the results in a noticeable way, but as X gets larger and larger, it overwhelms everything else, and all you see is randomness.

There was just one problem: he couldn’t prove it. Patterson’s Conjecture, as the X5/6 result became known, had replaced Kummer’s Conjecture – but the main question was still open.

But number theorists are perhaps uniquely single-minded, and Heath-Brown continued working on the problem for more than two decades. In 2000, he published a paper describing a new sieve method – a term mathematicians use to describe algorithms that work through the process of elimination – which he believed could be used to finally prove Patterson’s Conjecture.

He even sketched out a likely method to improve the sieve – make it sharper, more precise. Good enough to finally solve the now 150-year-old problem, or so he conjectured. But still, nobody could figure out how to do it – and now we know why.

Proving Patterson’s Conjecture

“We were able to prove that 1 = 2, after very, very complicated work,” Radziwiłł told Quanta. “I was kind of convinced that we basically have an error in our proof.”

They had made no mistake, though: Heath-Brown, like Kummer before him, had been proven wrong. His “improved” sieve was no such thing – and if Radziwiłł and Dunn were going to solve Patterson’s conjecture, they would need to go back to the original cubic large sieve.

“I think that was the main reason why nobody [solved Patterson’s Conjecture], because this [Heath-Brown] conjecture was misleading everybody,” Radziwiłł told Quanta. “I think if I told Heath-Brown that his conjecture is wrong, then he probably would figure out how to do it.”

So, knowing where previous attempts had stumbled, Radziwiłł and Dunn made history: their paper finally puts to bed a problem that’s been pestering number theorists for the best part of two centuries. And there’s just one teeny problem left to solve before it’s entirely, completely proven beyond a shadow of a doubt.

“An important ingredient in our proof is a dispersion estimate for cubic Gauss sums,” the pair note in their paper. “This estimate relies on the Generalized Riemann Hypothesis, and is one of the fundamental reasons why our result is conditional.”

So all we need now is a proof of the Riemann Hypothesis, and everything is settled. Easy-peasy, right?

Source Link: After 175 Years, Two False Conjectures, And The Birth Of Computing, This Theorem Finally Has A Proof