On the surface, it may not appear that Dr Dolittle and artificial intelligence (AI) have much in common. One belongs to early 20th-century children’s literature, while the other is firmly rooted in the 21st century. One is a physician turned vet who can talk to animals, and the other a computerized technology that cannot. Unless…

AI has already given us the ability to bark instructions at voice assistants like Siri and Alexa – could its potential be extended to the animal kingdom? Could it help us decipher some of the mysteries of the natural world and maybe one day allow us to “talk” to animals?

There are certainly some who think so, and some progress has already been made in attempting to decode animal communication using AI. You may be a long way off dishing with your dog or spilling the tea with your tortoise, but technology has improved – and hopefully will continue to improve – our understanding of other species and how they interact. When it comes to communicating with animals, perhaps Dolittle walked (and talked) so that AI could run.

Do animals use language?

The first hurdle in “translating” animal communication is understanding what that communication looks like. Human language is made up of verbal and non-verbal cues, and animal communication is no different.

Dogs wag their tails, for example, to convey a range of emotions. Bees dance to let other bees know where to find a good source of nectar or pollen. Dolphins use clicks and whistles to relay information.

However, there is some debate as to whether this can be considered a “language” – a debate that, according to Dr Denise Herzing, Research Director of the Wild Dolphin Project, AI could help put to bed.

“We currently don’t know if animals have a language,” Herzing told IFLScience. “[But] AI can help us look for language-like structures which might suggest animals have parts of a language.”

How can AI “translate” animal communication?

“Bioacoustics research has shown that animal vocalisations carry many types of information, from their identity to their status, internal state, and sometimes external objects or events,” Elodie F. Briefer, Associate Professor in Animal Behaviour and Communication at the University of Copenhagen, told IFLScience. “All of these could be picked up by AI.”

More specifically, by machine learning: a form of AI that learns patterns from data rather than following explicitly programmed instructions. In theory, it could be used to process recordings of animal communication and build language models based on these recordings.

“Machine learning is a powerful tool as it can be trained to identify patterns in very large datasets, so it could allow us to process large amounts of data and gain crucial knowledge about how information contained in animal sounds changes over time, etc,” Briefer added.

It’s the same technology that powers predictive text, Google Translate, and voice assistants. Turning it to animal communication may prove more challenging, but that hasn’t stopped researchers from trying.

“There are many different techniques and ways to approach the science,” Herzing told IFLScience. These will differ, she added, “[depending] on the data, the AI technique, or even the understanding of the animals themselves.”

The Earth Species Project, for example, is a nonprofit “dedicated to decoding non-human language”. Their focus so far has been on cetaceans and primates, but will, they say, eventually stretch to other animals, including corvids.

The project uses a machine learning technique that treats a language as a shape, “like a galaxy where each star is a word and the distance and direction between stars encodes relational meaning.” Two such shapes, built from two different languages, can then be “translated by matching their structures to each other”.
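To make the “galaxy” idea a little more concrete, here is a deliberately tiny Python sketch. It assumes each word has already been placed as a point in a two-dimensional space (the words and coordinates in the usage example are invented; real systems learn embeddings with hundreds of dimensions from huge datasets) and that the second “language” is simply the first one rotated and shifted. The hypothetical `translate` function searches for the rotation that best overlays one shape on the other, then pairs each word with its nearest neighbor. This is not the Earth Species Project’s method, only an illustration of matching two shapes without being told which words correspond.

```python
import math


def center(points):
    """Shift a cloud of 2D points so its average position sits at the origin."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    return [(x - mean_x, y - mean_y) for x, y in points]


def rotate(points, angle):
    """Rotate every point counterclockwise about the origin by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y, s * x + c * y) for x, y in points]


def nearest(point, cloud):
    """Return the index of the point in `cloud` closest to `point`."""
    return min(range(len(cloud)), key=lambda j: math.dist(point, cloud[j]))


def best_rotation(source, target, steps=360):
    """Brute-force the rotation that best overlays the `source` shape on `target`.

    Both clouds are centered first, so a shift between them does not matter.
    The score is the total distance from each rotated source point to its
    nearest target point; lower means the two shapes line up better.
    Mirror images and differences in scale are deliberately ignored.
    """
    src, tgt = center(source), center(target)
    best_angle, best_cost = 0.0, float("inf")
    for k in range(steps):
        angle = 2 * math.pi * k / steps
        cost = sum(math.dist(p, tgt[nearest(p, tgt)]) for p in rotate(src, angle))
        if cost < best_cost:
            best_angle, best_cost = angle, cost
    return best_angle


def translate(words_a, points_a, words_b, points_b):
    """Hypothetical 'translator': align shape A onto shape B, then pair each
    word in A with whichever word in B lands closest to it."""
    aligned = rotate(center(points_a), best_rotation(points_a, points_b))
    tgt = center(points_b)
    return {word: words_b[nearest(p, tgt)] for word, p in zip(words_a, aligned)}
```

Given two toy vocabularies where one is a rotated, shifted and reshuffled copy of the other, `translate` pairs each word with its counterpart. With shapes that are symmetrical or noisy the match quickly becomes ambiguous, which hints at why real animal recordings are so much harder to work with.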

Britt Selvitelle, cofounder of the Earth Species Project, is optimistic that the approach could help decode the first non-human language within the next decade, according to The New Yorker. Others, however, are more skeptical of AI as a tool for unraveling animal communication.

It’s all well and good analyzing recordings, but it’s meaningless without context, says Julia Fischer at the German Primate Center in Göttingen. “[AI] is not a magic wand that gives you an answer to the biological questions or questions of meaning,” she told New Scientist.

It’s still vital to look to nature and correlate recordings with real-world observations, and that is no mean feat.

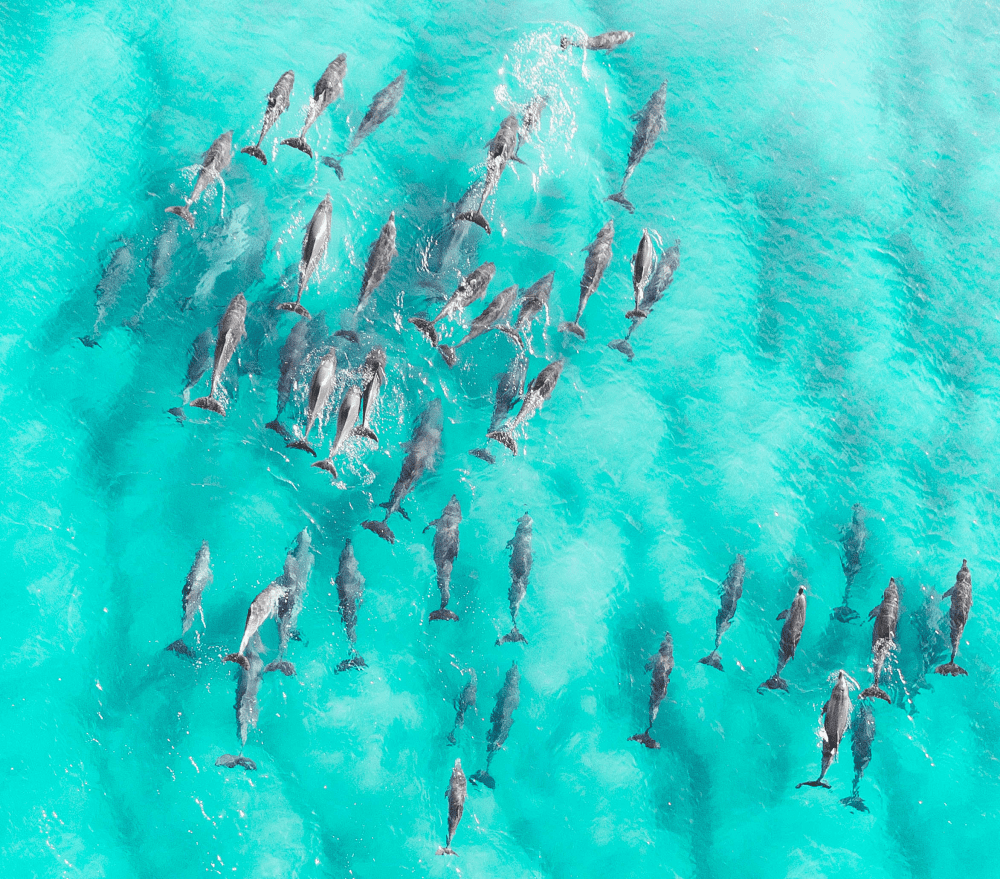

As highly social animals, cetaceans are a good starting point for trying to chat to animals. Image credit: F Photography R / Shutterstock.com

What has been achieved so far?

Lots of projects are currently working on unraveling the secrets of animal communication with the help of AI, the Earth Species Project being one of them. Last December, the project published a paper that claims to have solved the “cocktail party problem” – the challenge of picking out a single sound source when many sounds overlap.

Imagine a cocktail party, if you will. Among the chatter and background noise, it’s almost impossible to figure out who exactly the calls for another espresso martini are coming from. And the same issue is present when deciphering animal communication.

In the study, the researchers describe an experimental algorithm – which they applied to species including macaques, bottlenose dolphins, and Egyptian fruit bats – that has allowed them to pinpoint which individual in a raucous group of animals is “talking”.
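For readers curious about what “solving the cocktail party problem” can involve, here is a rough Python sketch of a classic textbook approach, independent component analysis (ICA), using the FastICA algorithm. It is not the method used in the study. It assumes there are as many microphones as sound sources and that each microphone hears a different blend of the same voices; the test signals and mixing weights are invented for illustration. The idea is to find a way of “unmixing” the recordings so that the outputs are as statistically independent of one another as possible.

```python
import numpy as np


def fast_ica(mixed, n_iter=200, tol=1e-10, seed=0):
    """Separate mixed signals with a basic FastICA (tanh nonlinearity, deflation).

    `mixed` has one row per microphone and one column per time sample.
    Returns one row per estimated source. As with any ICA, the sources come
    back in arbitrary order, sign, and scale.
    """
    x = mixed - mixed.mean(axis=1, keepdims=True)  # remove each channel's offset
    # Whitening: decorrelate the channels and give each one unit variance.
    eigvals, eigvecs = np.linalg.eigh(np.cov(x))
    whitener = eigvecs @ np.diag(1.0 / np.sqrt(eigvals)) @ eigvecs.T
    z = whitener @ x

    n = z.shape[0]
    rng = np.random.default_rng(seed)
    unmix = np.zeros((n, n))
    for i in range(n):
        w = rng.standard_normal(n)
        w /= np.linalg.norm(w)
        for _ in range(n_iter):
            g = np.tanh(w @ z)
            # Fixed-point update pushing w toward a maximally non-Gaussian projection.
            w_new = (z * g).mean(axis=1) - (1.0 - g**2).mean() * w
            # Deflation: stay orthogonal to the sources already found.
            w_new -= unmix[:i].T @ (unmix[:i] @ w_new)
            w_new /= np.linalg.norm(w_new)
            converged = abs(abs(w_new @ w) - 1.0) < tol
            w = w_new
            if converged:
                break
        unmix[i] = w
    return unmix @ z
```

Real animal recordings are far messier than this: there may be more callers than microphones, the animals move, and calls from the same species sound alike, which is why researchers turn to more powerful machine learning methods.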

AI is cementing itself as a valuable tool in other areas of zoology too. “[It] has been used notably in a relatively new field termed ‘ecoacoustics’, which monitors biodiversity through passive acoustic monitoring and requires very large datasets,” Briefer told IFLScience.

“People have also used it to extract information from long-term recordings (e.g. identifying marine mammals from underwater recordings). More recently, it has been used to identify patterns in other contexts as well, such as to identify underlying emotions in [pigs and chickens].”

Briefer’s work includes one such study. She and her coauthors trained an AI system to recognize positive or negative emotions in the grunts, squeals, and oinks of pigs.

In rodents, software called DeepSqueak has been used to judge whether an animal is experiencing stress based on its ultrasonic calls. These sounds, too high-pitched for the human ear to hear, are an important part of how rodents communicate socially. The software has also been used on primates and dolphins to help researchers automatically label recordings of animals’ calls.

AI is being used to help label primate calls. Image credit: Gudkov Andrey / Shutterstock.com

The nonprofit Wild Dolphin Project, founded by Herzing, aims to use AI to discover patterns in dolphin calls and explore communication between dolphins and humans. In 2013, after teaching a pod of dolphins to associate a particular whistle with a type of seaweed, researchers used a machine-learning algorithm to identify and translate the sound in the wild.

Meanwhile, Project CETI (Cetacean Translation Initiative) is attempting to decode sperm whale communication, using language models to analyze the whales’ codas (patterned sequences of clicks) and piece together their “language”.

Why these animals?

No species is superior when it comes to decoding communication, Briefer believes, but still, some have been targeted by researchers more than others.

“When considering acoustic communication, of course the most interesting ones are those that are very vocal (e.g. birds, pigs, meerkats, etc.) and those that have a large sound repertoire,” Briefer told IFLScience.

Equally, social animals, such as primates, whales, and dolphins, are more likely to have well-developed communication systems, making them ideal for study.

“Dolphins live in highly social societies, live long lives, and have long memories, suggesting that they have complex relationships to communicate about,” Herzing explained. Intelligence may also play a part.

“Cetaceans, or at least dolphins, are known to have a high EQ [encephalization quotient, a measure of brain size relative to body size], abilities to learn artificial languages, understand abstract ideas, and to recognize themselves in a mirror,” Herzing added. “These are some of the bedrocks of intelligence.”

What are the benefits of understanding animal communication?

Aside from the obvious – finally finding out what your cat really thinks of you – there are lots of ways that an improved understanding of animal communication can be beneficial, for humans and animals alike.

“For both captive and wild species, it allows us to understand them better, and know when they are thriving or suffering,” Briefer told IFLScience. “This is crucial for the species we have around us (pets and farm animals for example), as their welfare depends on us.”

Not only could this make us better pet owners, but it has the potential to forever change our relationship with all animals. “Knowing that animals have language would hopefully make humans understand that we are not the only sentient species on the planet,” Herzing added.

At the very least, this could inspire more sympathy toward other species and lead us to rethink the way that we treat them. This could have far-reaching implications, including for the use of animals in sport, entertainment, and research.

It could even trigger a complete overhaul of animal agriculture. With a better understanding of the animals around us, can we still justify practices that exploit and kill them? From a human perspective, there is also a lot we could learn, not just about animals but about ourselves and, maybe, other life forms.

Understanding animal communication could also teach us about the evolution of language, Briefer told IFLScience. “The tools we develop with species on earth might apply to far away worlds should we encounter any other life forms,” Herzing speculated, adding that these tools could help us gauge their intelligence and whether we could communicate with them.

Making the leap from animal to alien communication is certainly a move Dolittle never made. Should the fictional vet be worried about being bested? Not just yet. Actually talking to animals through AI, let alone to extraterrestrials, is still a long way off. But AI certainly has the potential to improve our understanding of other species and the world around us. In fact, it already has. Perhaps Dolittle should start feeling a little nervous after all.

This article first appeared in issue 3 of CURIOUS, IFLScience’s e-magazine.

Source Link: Can AI Help Us Talk To Animals?