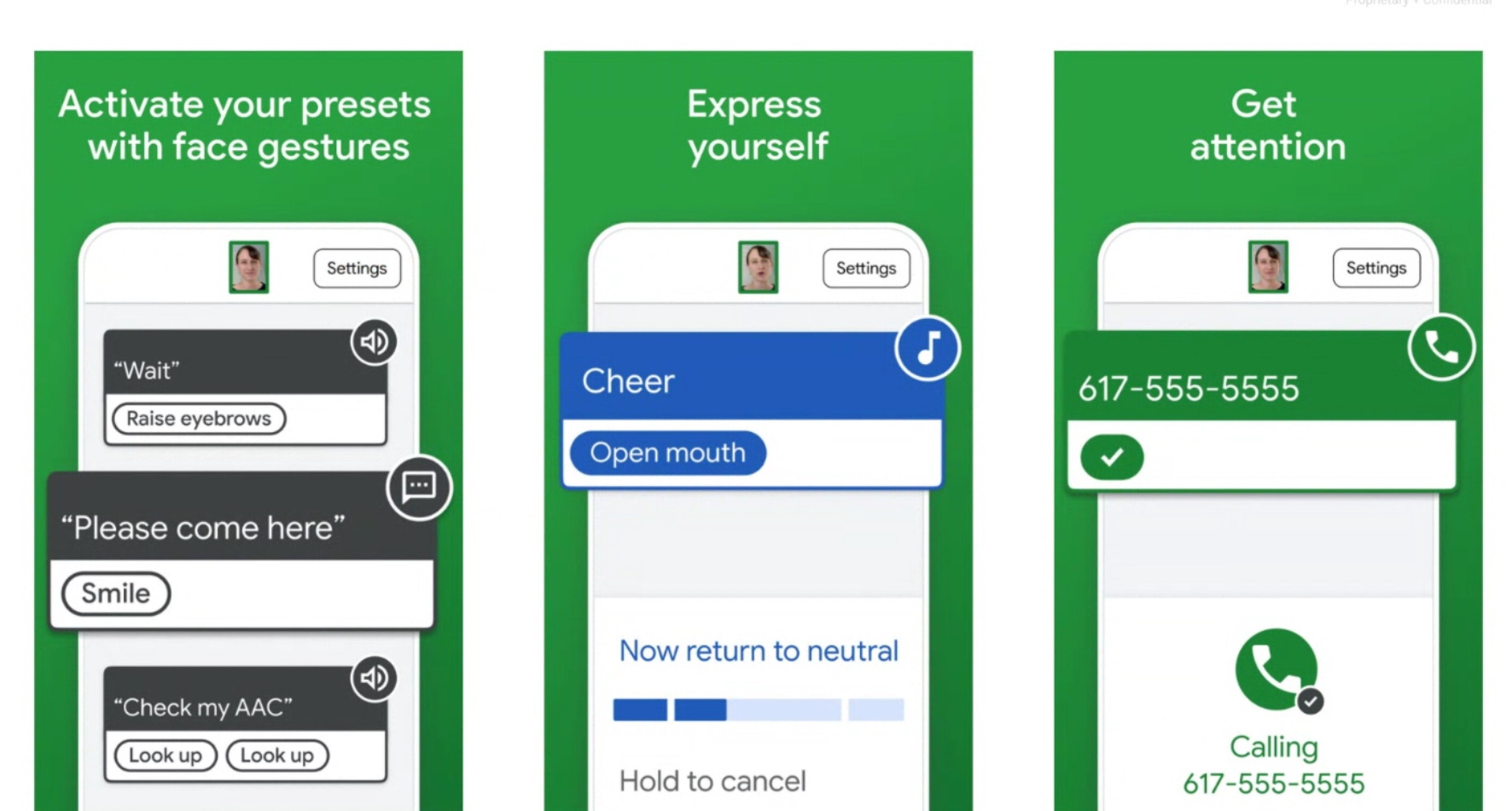

Making smartphones more accessible is always a good idea, and Google's latest Android features bring quick actions and navigation to people for whom facial expressions are a primary means of interacting with the world. Project Activate and Camera Switches let users perform tasks like speaking a custom phrase or navigating with a switch interface through facial gestures alone.

The new features rely on the smartphone's front-facing camera, which watches the user's face in real time for one of six expressions: a smile, raised eyebrows, an open mouth, and looking left, right, or up. All processing happens on the device and no image data is saved. Nor is this what is generally understood as "facial recognition": rather than identifying who the user is, the machine learning model can focus specifically on, for example, the eyebrows, and send a signal whenever they move past a certain customizable threshold.
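The threshold idea described above can be illustrated with a minimal sketch. It assumes, hypothetically, that some on-device model produces a per-frame score between 0 and 1 for a single expression (for instance, how far the eyebrows are raised); the function name and scores are illustrative, not Google's implementation. A signal fires only when the score first rises past the user-chosen threshold, so holding the expression does not repeat it.

```python
from typing import Iterable, List


def detect_gesture_events(scores: Iterable[float], threshold: float = 0.6) -> List[int]:
    """Return frame indices where a gesture score first rises past the threshold.

    Scores are assumed (hypothetically) to be per-frame values in [0, 1]
    from an on-device model. Only the upward crossing fires, so a held
    expression produces one event rather than one per frame.
    """
    events = []
    above = False
    for i, score in enumerate(scores):
        if score >= threshold and not above:
            events.append(i)  # score just crossed the threshold: send a signal
            above = True
        elif score < threshold:
            above = False  # expression relaxed; the next crossing can fire again
    return events


# Hypothetical eyebrow-raise scores over eight frames
frames = [0.1, 0.3, 0.7, 0.8, 0.4, 0.2, 0.9, 0.5]
print(detect_gesture_events(frames))                  # [2, 6]
print(detect_gesture_events(frames, threshold=0.75))  # [3, 6]
```

Raising the threshold makes the gesture harder to trigger by accident, which is the kind of trade-off a customizable threshold lets each user tune.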

Each expression can be assigned a different role. Camera Switches integrates with Android's existing Switch Access feature, which lets people who use assistive tech such as a joystick or blow tube navigate the phone's OS. Now that navigation can be done without any peripheral device at all: users can pick facial gestures for iterating through selections, confirming a choice, backing out, and so on.
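To make the switch model concrete, here is a minimal simulation of switch-style scanning. The gesture names, the gesture-to-action mapping, and the item list are all hypothetical, chosen only for illustration; this is not the Android Switch Access API. One gesture moves focus to the next item, another selects the focused item, and a third goes back.

```python
from typing import Dict, List

# Hypothetical mapping of facial gestures to switch actions; real users choose their own.
GESTURE_ACTIONS: Dict[str, str] = {
    "look_right": "next",
    "smile": "select",
    "raise_eyebrows": "back",
}


def run_switch_scan(items: List[str], gestures: List[str]) -> List[str]:
    """Simulate switch scanning: move focus, select the focused item, or go back."""
    focus = 0
    log = []
    for gesture in gestures:
        action = GESTURE_ACTIONS.get(gesture)
        if action == "next":
            focus = (focus + 1) % len(items)  # wrap around to the first item
        elif action == "select":
            log.append(f"open {items[focus]}")
        elif action == "back":
            log.append("back")
        # Gestures with no assigned action are ignored
    return log


apps = ["Phone", "Messages", "Camera"]
print(run_switch_scan(apps, ["look_right", "smile", "raise_eyebrows",
                             "look_right", "look_right", "smile"]))
# ['open Messages', 'back', 'open Phone']
```

Because every action is just a discrete event, the same scanning logic works whether the event comes from a physical switch or a facial gesture.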

Using Project Activate, expressions can be tied to self-contained actions like speaking a phrase. Many people with disabilities rely on caretakers for a variety of reasons, but one thing you can't ask a caretaker to do is get your caretaker's attention! So one useful application might be assigning (say) an extended eyebrow raise to have the device speak a phrase such as "hey!", "I need help with something," or "thanks!"

The gestures can also be used to play an audio file or to text or call a predetermined number. More expressions and capabilities are on the way, as well as more languages. Faces don't have languages, of course, but the app and its support documentation do, so Project Activate will start in English-speaking countries and expand from there. Camera Switches, on the other hand, will be available in 80 languages from the start.

Note that the two features can't be used at the same time, since both need access to the camera and the expression-recognition system. Users should therefore make sure they have a backup method for navigation. Both should run on nearly any Android phone from the last five years or so.

Lastly, an update to Google's Lookout app, which reads labels for people with visual impairments, adds the ability to scan and read out handwritten content the same way it already does printed text. That's useful for sticky notes, "gone fishing" signs on store doors, and greeting cards with notes from the sender. Usage of the app has grown substantially over the last year, so Google is building it out. (Support for identifying euro and Indian rupee banknotes should help fuel that growth too.)

All the new stuff should be available later this week free of charge.

Source: Google powers up assistive tech in Android with facial gesture-powered shortcuts and switches
