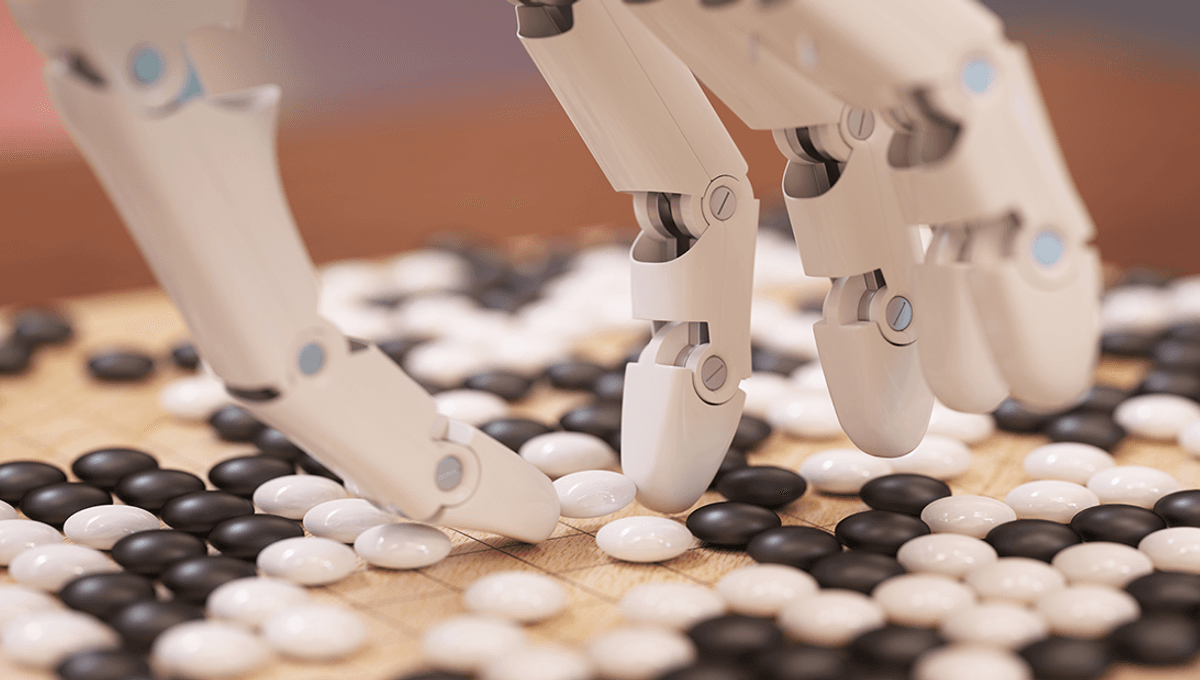

The top player in the world at the board game Go is a machine-learning algorithm that taught itself how to play. In fact, Google’s AlphaGo Zero taught itself how to become world champion of the game in just three days, just to really rub it in to its professional human competitors, who spent years honing their game only to be beaten by the bot.

The bot can even beat the previous version of itself, which could beat world champions.

“It defeated the version of AlphaGo that won against the world champion Lee Sedol, and it beat that version of AlphaGo by a hundred games to zero,” the lead researcher for AlphaGo explained in a 2017 video.

Well, now humans have pulled one back against the machines. One Go player, who is placed one level below the top amateur ranking according to the Financial Times, was able to beat AI player KataGo in 14 out of 15 games.

How did humans achieve this comeback? Well, with a little help from, uh, machine learning. A group of researchers, who published a preprint of their research, trained their own AI “adversaries” to search for weaknesses in KataGo.

“Notably, our adversaries do not win by learning to play Go better than KataGo – in fact, our adversaries are easily beaten by human amateurs,” the team wrote in their paper. “Instead, our adversaries win by tricking KataGo into making serious blunders.”

“This result suggests that even highly capable agents can harbor serious vulnerabilities,” they added.

The exploit the algorithm found was to attempt to create a large loop of stones around the AI victim’s stones, but then “distract” the AI by placing pieces in other areas of the board. The computer fails to pick up on the strategy, and loses 97-99 percent of the time, depending on which version of KataGo is used.
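For readers who don't play Go, the reason an encircling loop is so dangerous comes down to the game's capture rule: a connected group of stones is removed once it has no empty neighboring points ("liberties") left. The toy sketch below illustrates only that rule on a made-up 5×5 position. The board layout and the `group_and_liberties` helper are our own illustrative assumptions; they are not taken from the researchers' code or from KataGo.

```python
from typing import List, Set, Tuple

Point = Tuple[int, int]  # (row, column)


def group_and_liberties(board: List[str], start: Point) -> Tuple[Set[Point], Set[Point]]:
    """Flood-fill the connected group of same-colored stones containing `start`.

    Returns the group and the set of empty points adjacent to it (its liberties).
    'X' is black, 'O' is white, '.' is an empty point.
    """
    rows, cols = len(board), len(board[0])
    color = board[start[0]][start[1]]
    if color == ".":
        raise ValueError("start must be a stone, not an empty point")

    group: Set[Point] = set()
    liberties: Set[Point] = set()
    stack = [start]
    while stack:
        r, c = stack.pop()
        if (r, c) in group:
            continue
        group.add((r, c))
        # Stones connect only horizontally and vertically, never diagonally.
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            if board[nr][nc] == ".":
                liberties.add((nr, nc))
            elif board[nr][nc] == color and (nr, nc) not in group:
                stack.append((nr, nc))
    return group, liberties


# Hypothetical position: black's loop around white has one gap left.
BEFORE = [
    ".XXX.",
    "XOOOX",
    "XOOO.",
    "XOOOX",
    ".XXX.",
]

# The same position after black plays in the gap and closes the loop.
AFTER = [
    ".XXX.",
    "XOOOX",
    "XOOOX",
    "XOOOX",
    ".XXX.",
]

for label, board in (("before", BEFORE), ("after", AFTER)):
    group, liberties = group_and_liberties(board, (2, 2))
    status = "captured" if not liberties else "alive"
    print(f"{label}: {len(group)} white stones, {len(liberties)} liberties -> {status}")
```

In the first position the white group still has one liberty; once black fills the gap it has none and would be removed from the board. The attack described above depends on the victim failing to notice that its group is this close to being surrounded.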

Kellin Pelrine, an author on the paper, then used the strategy the adversary had developed to beat the computer consistently himself. Once Pelrine had learned the strategy, he needed no further help from AI.

While it’s great that we showed SkyNet we’ve still got it (even if we did get a little help from a Terminator), the team says that the research has bigger implications.

“Our results underscore that improvements in capabilities do not always translate into adequate robustness,” the team concluded. “Failures in Go AI systems are entertaining, but similar failures in safety-critical systems like automated financial trading or autonomous vehicles could have dire consequences.”

A preprint of the study has been published on the researchers’ website.

[H/T: Financial Times]
