Thanks to advances in artificial intelligence (AI) chatbots, and to warnings from prominent AI researchers that we need to pause AI research lest it destroy society, people have been talking a little more about the ethics of artificial intelligence lately.

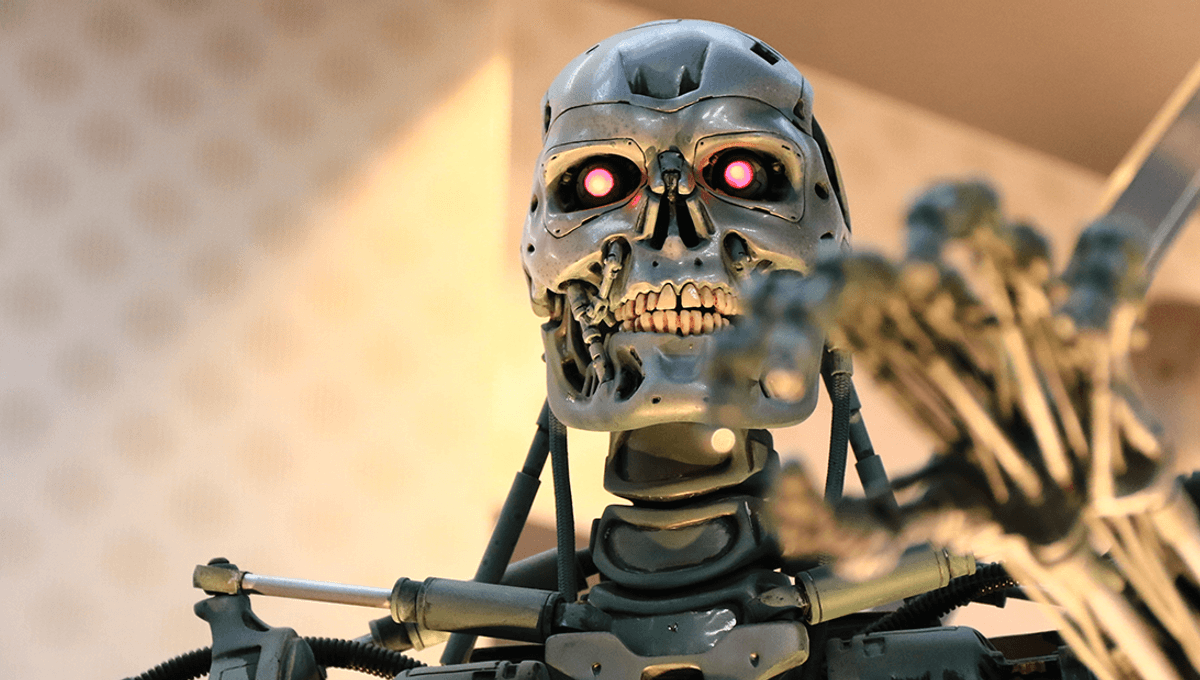

The topic is not new: Since people first imagined robots, some have tried to come up with ways of stopping them from seeking out the last remains of humanity hiding in a big field of skulls. Perhaps the most famous example of thinking about how to constrain technology so that it doesn’t destroy humanity comes from fiction: Isaac Asimov’s Laws of Robotics.

The laws were introduced in Asimov's 1942 short story "Runaround" and explored throughout the collection I, Robot. In his fiction, they are built into every robot as a safety feature. They are not, as some on the Internet appear to believe, real laws, nor is there currently a way to implement such laws.

The rules themselves go like this:

First Law: A robot may not injure a human being or, through inaction, allow a human being to come to harm.

Second Law: A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

Third Law: A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Zeroth Law: A robot may not harm humanity, or, by inaction, allow humanity to come to harm.

Asimov, writing in the 1940s, not only foresaw that we might need to program AI with very specific laws to stop it from harming us, but also realized that those laws would probably fail. In one story, an AI wrests control of a power station in space because of the First and Second Laws. It knows that it would run the station better than the humans, so standing aside would mean harming them through inaction. Because the Second Law gives way to the First, this allows it to disobey the orders it has been given.

In another, darker story, robots are given a definition of "human" that includes only a small group of people, which allows the robots to commit genocide.

Just as the laws don't always work as their designers intended in his books, a future superintelligence could find ways to circumvent them.

“The First Law fails because of ambiguity in language, and because of complicated ethical problems that are too complex to have a simple yes or no answer,” philosopher of AI Chris Stokes wrote in a paper on the topic. “The Second Law fails because of the unethical nature of having a law that requires sentient beings to remain as slaves.”

“The Third Law fails because it results in a permanent social stratification, with the vast amount of potential exploitation built into this system of laws. The ‘Zeroth’ Law, like the first, fails because of ambiguous ideology. All of the Laws also fail because of how easy it is to circumvent the spirit of the law but still remaining bound by the letter of the law.”

AI researchers already need to build safeguards that prevent harm to humans, for instance in self-driving vehicles, which can kill people just as any ordinary car can. However, AI is not currently at a point where it could understand the laws, let alone follow them.

"The other big issue with the laws is that we would need a significant advancement in AI for robots to actually be able to follow them," Mark Robert Anderson, a professor of computing and information systems, wrote in a piece for The Conversation.

“So far, emulating human behaviour has not been well researched in the field of AI and the development of rational behaviour has focused on limited, well defined areas,” Anderson wrote. “With this in mind, a robot could only operate within a very limited sphere and any rational application of the laws would be highly restricted. Even that might not be possible with current technology, as a system that could reason and make decisions based on the laws would need considerable computational power.”

Here’s hoping someone cracks the problem of how to prevent AI harm to humans long before we find ourselves in that field of skulls.

Source Link: No, People, Asimov's Laws Of Robotics Are Not Actual Laws