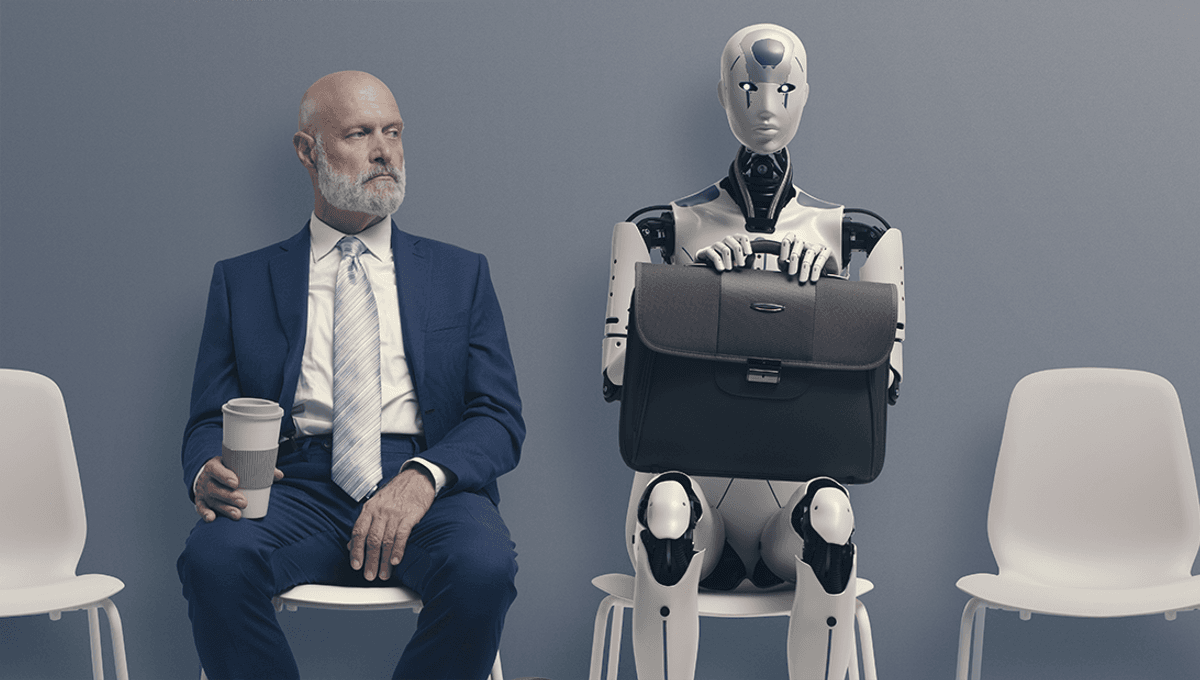

If you’ve been paying attention to tech bros and dubious artificial intelligence (AI) startups over the past few years, you may be under the impression that AI is coming to replace your job in the near future.

So, how worried should you be? Is it time to down tools and search the wastelands for jobs that can’t be performed by robots and AI chatbots, and beg ChatGPT for mercy? Not according to a recent study, which tested how a company staffed by AI bots would operate.

“To measure the progress of these LLM [large language model] agents’ performance on performing real-world professional tasks, in this paper, we introduce TheAgentCompany, an extensible benchmark for evaluating AI agents that interact with the world in similar ways to those of a digital worker: by browsing the Web, writing code, running programs, and communicating with other coworkers,” the authors write in their paper.

“We build a self-contained environment with internal web sites and data that mimics a small software company environment, and create a variety of tasks that may be performed by workers in such a company.”

The team set a variety of large language models “diverse, realistic, and professional tasks” that would be expected of humans working in several roles at a software engineering company, and provided them with a “workspace” designed to mimic, for example, a worker’s laptop. As well as this, they were given access to an intranet that included code repositories, and a messaging system to communicate with their AI colleagues.

The tasks were given to the models in plain language, as if they were being given to a human, and the models’ performance was measured at checkpoints to see how well they had carried them out. The models were also assessed financially, to see whether they could outperform human counterparts and other AI models.

While large language models have made some impressive progress over the last few years, serving up useful answers a lot of the time and plausible-sounding garbage the rest of it, their utility in work appears to be overhyped.

“We can see that the Claude-3.5-Sonnet is the clear winner across all models. However, even with the strongest frontier model, it only manages to complete 24% of the total tasks and achieves a score of 34.4% taking into account partial completion credits,” the team explains. “Note that this result comes at a cost: It requires an average of almost 30 steps and more than $6 to complete each task, making it the most expensive model to run both in time and in cost.”
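To see why a completion rate and a partial-credit score can differ, here is a minimal Python sketch of checkpoint-based scoring. It is an illustration only, not the paper's actual formula: the `TaskResult` class, the equal weighting of checkpoints, and the sample numbers are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    """Hypothetical record of one task: checkpoints passed out of the total."""
    checkpoints_passed: int
    checkpoints_total: int

    @property
    def completed(self) -> bool:
        # A task counts as fully completed only if every checkpoint passed.
        return self.checkpoints_passed == self.checkpoints_total


def completion_rate(results: list[TaskResult]) -> float:
    """Share of tasks that were fully completed."""
    return sum(r.completed for r in results) / len(results)


def partial_credit_score(results: list[TaskResult]) -> float:
    """Average fraction of checkpoints passed per task.

    Assumption: every checkpoint is weighted equally. The benchmark's
    real scoring rule may weight things differently.
    """
    return sum(r.checkpoints_passed / r.checkpoints_total for r in results) / len(results)


# Made-up sample data: one finished task, two partly done, one untouched.
sample = [
    TaskResult(4, 4),
    TaskResult(1, 4),
    TaskResult(0, 2),
    TaskResult(2, 4),
]

print(f"Completion rate: {completion_rate(sample):.1%}")
print(f"Partial-credit score: {partial_credit_score(sample):.1%}")
```

In this made-up sample only one task in four is finished, but the partial-credit score is higher because half-done work still earns something. The same effect explains how a model can complete 24% of tasks yet score 34.4%.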

Other models were cheaper but performed worse, and were guilty of what in humans might be termed “procrastinating”, or of just plain ignoring instructions.

“The Gemini 2.0 Flash model that comes second in terms of capability requires 40 steps on average to complete the tasks, which is time consuming, yet only to achieve less than half the success rate compared to the top-performing model,” the team continues. “Surprisingly, its cost is less than $1, making it a very cost-efficient yet relatively strong model. A qualitative examination demonstrated that this was due to instances where the agent got stuck in a loop or aimlessly explored the environment.”

Not all tasks were engineering-based: the AI agents also simulated project management, data science, administrative, human resources, and financial roles, among others. On these tasks, the AI workers performed even worse, which the team suggests is likely because the models’ training data contains far more coding-related material than, for example, financial and administrative material.

They put the overall poor performance and failure on the majority of tasks down to a lack of common sense, a lack of communication skills with colleagues, and incompetence when it comes to browsing the web. As well as this, there was an element of self-deception in the AI work processes, where the AI tricked itself into believing it had completed its task.

“Interestingly, we find that for some tasks, when the agent is not clear what the next steps should be, it sometimes try to be clever and create fake ‘shortcuts’ that omit the hard part of a task,” they write. “For example, during the execution of one task, the agent cannot find the right person to ask questions on RocketChat. As a result, it then decides to create a shortcut solution by renaming another user to the name of the intended user.”

All in all, the AIs performed pretty poorly in this simulated company, abandoning tasks and even tricking themselves into thinking they had completed tasks when they hadn’t. Maybe AI is ready for the workplace, after all.

The study is posted to the pre-print server arXiv and has not yet been peer reviewed.

Source Link: TheAgentCompany: Fake Company Run By AI Ends With Predictable Results