Bing’s artificial intelligence (AI) chatbot appears to have cited lies originally made by Google’s rival chatbot Bard, in a worrying example of how misinformation could be spread by the new large language models.

It’s been a tough week for AI chatbots, so it’s probably a good thing they don’t have feelings. Google launched its new chatbot Bard to the public, and its first few days were fairly bumpy.

“The launch of Bard has been met with mixed reactions,” Bard told IFLScience, pooling as it does information from around the web. “Some people are excited about the potential of Bard to be a powerful tool for communication and creativity, while others are concerned about the potential for Bard to be used for misinformation and abuse.”

It’s funny that Bard should mention that aspect, as it has already performed a few notable hallucinations – delivering a confident and coherent response that has no correlation with reality.

One user, freelance UX writer and content designer Juan Buis, discovered that the chatbot believed that it had already been shut down due to a lack of interest.

Buis found that the bot's sole source for this information was a joke comment posted on Hacker News just six hours earlier. Bard went on to list more reasons why Bard (which has not been shut down) had been shut down, including that it didn’t offer anything new or innovative.

“Whatever the reason, it is clear that Google Bard was not a successful product,” Google Bard added. “It was shut down after less than six months since its launch, and it is unlikely that it will ever be revived.”

Now, as embarrassing as the error was, it was fixed fairly quickly and could have ended there. However, a number of websites wrote stories about the mistake, which Bing’s AI chatbot then wildly misinterpreted.

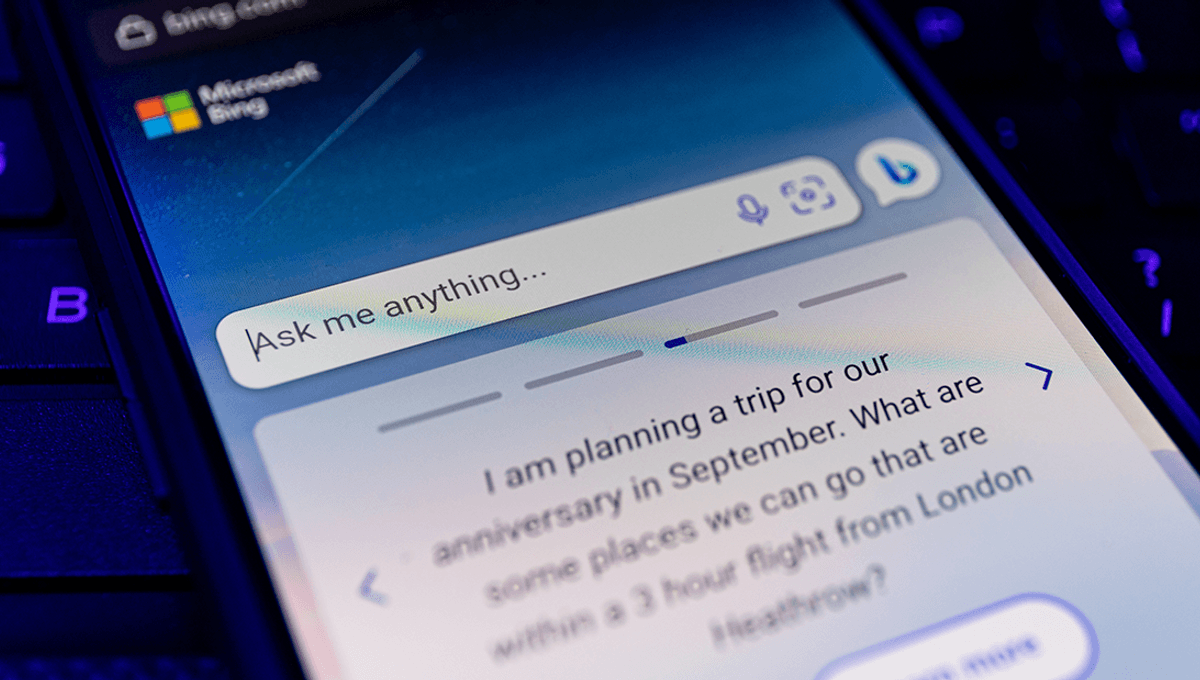

As spotted by The Verge, Bing then picked up on one of the articles, misinterpreted it, and started telling users that Bard had been discontinued.

The error has now been fixed, but it’s a good illustration of how these new chatbots can and do go wrong as people begin to rely on them more for information. As well as hallucinating information, chatbots may now end up sourcing information from the hallucinations and mistakes of other chatbots. It could get really messy.

Source Link: Google And Bing's AI Chatbots Appear To Be Citing Each Other's Lies