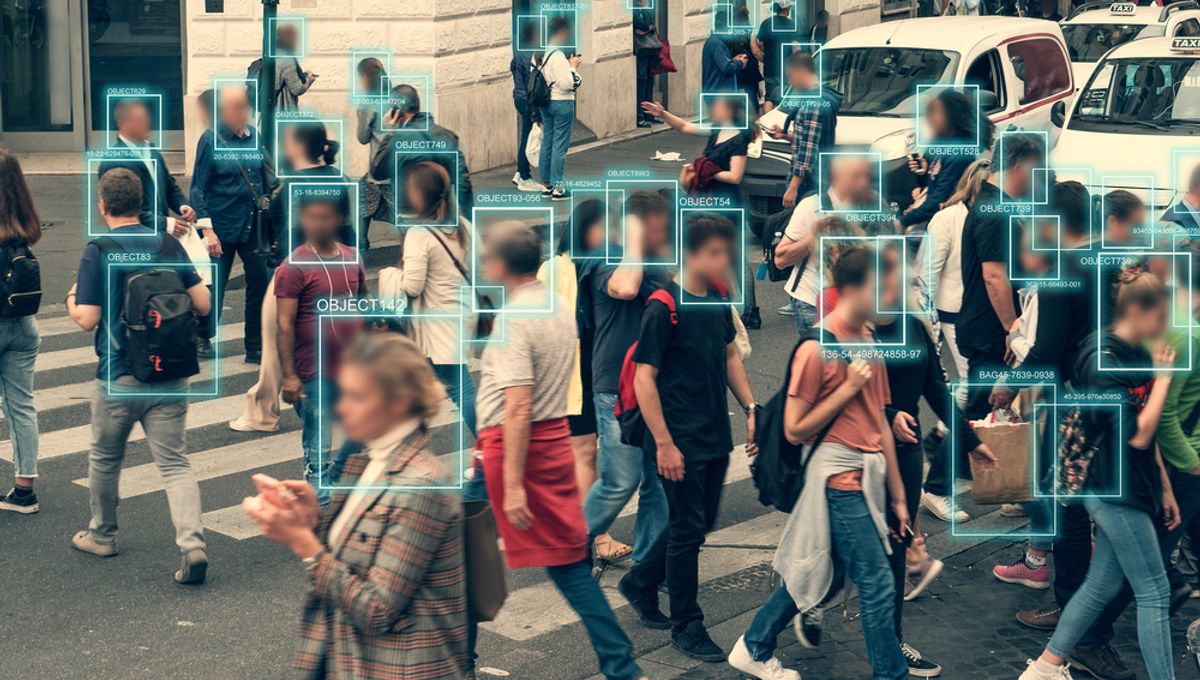

A new AI designed to predict crime before it happens has now been tested in multiple US cities, where it correctly predicted crimes around 80 to 90 percent of the time. The AI is supposedly intended to guide policy and direct resources to the areas of a city that need them most, but concerns are rife given AI's poor track record and inherent biases.

Now, its creator has sat down for an interview with BBC Science Focus, and he had a lot to say, including why he believes deploying his AI would be a good thing.

In his latest paper, published in Nature Human Behaviour, Professor Ishanu Chattopadhyay and colleagues demonstrate a predictive AI model in eight major US cities. The concept is simple. The city of Chicago, for example, releases event logs for each crime, including where and when it happened, and this data is fed into a machine learning algorithm. The city is then divided into 90 square meter (1,000 square foot) tiles, and the events logged in each tile are arranged in order over time to create what the researchers refer to as a “time series”. The AI then uses these time series to predict crimes based on where and when they tend to happen.
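To make the pipeline above more concrete, here is a minimal Python sketch of the binning step (events into tiles, tiles into daily time series) followed by a deliberately naive predictor. This is not the researchers' model: the frequency-based baseline is swapped in purely for illustration, and `Event`, `Prediction`, `build_time_series`, `predict_next_day`, the planar meter coordinates, the 7-day window and the 0.5 threshold are all hypothetical choices. The output names only a tile, a day and a crime type, never a person.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Event:
    """One row of a hypothetical crime event log: what, where and when only."""
    kind: str    # e.g. "robbery"
    x_m: float   # easting in meters on an assumed local planar grid
    y_m: float   # northing in meters on the same assumed grid
    day: date


@dataclass(frozen=True)
class Prediction:
    """A forecast names a place, a day and a crime type; there is no person field."""
    tile: tuple[int, int]
    day_index: int       # days since the start of the series
    kind: str
    probability: float  # naive estimate of P(at least one event that day)


def tile_of(event: Event, tile_size_m: float) -> tuple[int, int]:
    """Map a coordinate to the integer index of the square tile containing it."""
    return (int(event.x_m // tile_size_m), int(event.y_m // tile_size_m))


def build_time_series(events, tile_size_m, start, n_days):
    """Return {(tile, kind): [daily event counts]} covering n_days from start."""
    series = defaultdict(lambda: [0] * n_days)
    for ev in events:
        t = (ev.day - start).days
        if 0 <= t < n_days:  # ignore events outside the observation window
            series[(tile_of(ev, tile_size_m), ev.kind)][t] += 1
    return dict(series)


def predict_next_day(series, window=7, threshold=0.5):
    """Hypothetical baseline: score each (tile, kind) by the fraction of the
    last `window` days that had at least one event, and keep those whose
    score reaches `threshold`, highest first."""
    preds = []
    for (tile, kind), counts in series.items():
        recent = counts[-window:]
        p = sum(1 for c in recent if c > 0) / len(recent)
        if p >= threshold:
            preds.append(Prediction(tile, len(counts), kind, p))
    return sorted(preds, key=lambda pr: -pr.probability)


if __name__ == "__main__":
    start = date(2022, 1, 1)
    # A robbery logged in the same tile every day for a week...
    log = [Event("robbery", 120.0, 40.0, date(2022, 1, d)) for d in range(1, 8)]
    # ...and a single burglary elsewhere.
    log.append(Event("burglary", 900.0, 900.0, date(2022, 1, 3)))
    series = build_time_series(log, tile_size_m=300.0, start=start, n_days=7)
    for pred in predict_next_day(series):
        print(pred)
```

Because the input holds only what, where and when, a predictor like this can only ever answer in the same terms. It also simply reproduces whatever patterns are in the logs, including any skew in where events happened to be recorded.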

Essentially, the model can say “there will likely be an armed robbery in this specific area on this specific day”, but not who will carry it out. This distinguishes it from other crime-detection technology, such as the AI we previously reported on that claimed to identify the people most likely to be criminals (which was, of course, horrifically racist and flawed).

“People have concerns that this will be used as a tool to put people in jail before they commit crimes. That’s not going to happen, as it doesn’t have any capability to do that. It just predicts an event at a particular location,” Chattopadhyay told BBC Science Focus.

“It doesn’t tell you who is going to commit the event or the exact dynamics or mechanics of the events.”

This raises one of the most important questions on the subject: like so many other social predictive AI systems, does this model fall prey to the crippling racial and socio-economic biases that have plagued its predecessors?

According to Chattopadhyay, the way the model is trained allows it to avoid these biases, because only event logs are fed into it. There are very few manual inputs, which is supposedly a good thing.

“We have tried to reduce bias as much as possible. That’s how our model is different from other models that have come before,” he added.

It now remains to be seen whether cities will begin using such a model for policymaking, or steer clear of it given the dark track record of similar systems. The researchers seem confident their AI can avoid these issues, but given how much scope such systems leave for abuse, many remain skeptical.

Source Link: AI Predicts 90 Percent Of Crime Before It Happens, Creator Argues It Won't Be Misused