You may have heard about an asteroid set to fly near Earth that is the size of 18 platypus, or maybe the one that’s the size of 33 armadillos, or even one the size of 22 tuna fish.

These outlandish comparisons are the invention of Jerusalem Post journalist Aaron Reich (who bills himself as “creator of the giraffe metric”), but real astronomers sometimes measure celestial objects with units that are just as strange.

The idea of a planet that’s 85% the mass of Earth seems straightforward. But what about a pulsar-wind nebula with a brightness of a few milliCrab? That’s where things get weird.

A platypus that is approximately 1/18th of the size of Asteroid 2023 FH7. Image credit: Lukas_Vejrik/Shutterstock.com

Why do astronomers use such strange units?

The basic problem is that lots of things in space are way too big for our familiar units.

Take my whippet Astro, who is 94cm long. Earth’s radius is about 638 million cm, or 6.8 million Astros.

Jupiter’s radius is 11.2 Earths, or 76 million Astros. That number of Astros is a bit ridiculous, which is why we adjust our unit choice to one that makes more sense.

At an even larger scale, consider the star Betelgeuse: its radius is 83,000 Earths, or 764 times the radius of the Sun. So if we want to talk about how big Betelgeuse is, it’s much more convenient to use the radius of the Sun as our unit, instead of the radius of Earth (or to describe it as about 563 billion Astros).
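To see how quickly the numbers get out of hand, here is a minimal sketch that converts each radius into Astros by dividing by Astro’s 94cm length. It uses only the figures quoted above; the function and variable names are made up for illustration.

```python
# Figures quoted in the article.
ASTRO_CM = 94                 # length of one whippet, in cm
EARTH_RADIUS_CM = 638e6       # Earth's radius, in cm
JUPITER_IN_EARTHS = 11.2      # Jupiter's radius, in Earth radii
BETELGEUSE_IN_EARTHS = 83_000 # Betelgeuse's radius, in Earth radii


def in_astros(length_cm):
    """Express a length in units of one Astro (hypothetical helper)."""
    return length_cm / ASTRO_CM


earth_astros = in_astros(EARTH_RADIUS_CM)
jupiter_astros = in_astros(JUPITER_IN_EARTHS * EARTH_RADIUS_CM)
betelgeuse_astros = in_astros(BETELGEUSE_IN_EARTHS * EARTH_RADIUS_CM)

print(f"Earth:      {earth_astros / 1e6:.1f} million Astros")
print(f"Jupiter:    {jupiter_astros / 1e6:.0f} million Astros")
print(f"Betelgeuse: {betelgeuse_astros / 1e9:.0f} billion Astros")
```

Swapping `ASTRO_CM` for the radius of Earth or the Sun gives the small, readable numbers astronomers actually prefer.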

Heavy stuff

If we want to measure how heavy an asteroid is, we could do it with camels – but in space we’re more interested in mass than in weight. Mass is a measure of how much stuff something is made of.

On Earth, the weight of an object like Astro depends on his mass and on the strength of the gravity pulling him down to the ground.

We can think of weight in terms of how hard it is to lift an 18kg Astro off the ground. This would be easy to do on Earth, even easier somewhere with lower gravity like the Moon, and much harder somewhere with higher gravity like Jupiter.

On the other hand, Astro’s mass is how much stuff he’s made of – and it’s the same no matter which planet he’s on.

Astronomers use Earth and the Sun as handy units to measure mass. For example, the Andromeda galaxy is approximately three trillion times the mass of the Sun (or 3×10⁴¹ – that’s a 3 followed by 41 zeros – Astros).
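The difference between weight and mass, and the Andromeda figure, can both be checked with a few lines of arithmetic. This is only a rough sketch: the surface gravity values and the mass of the Sun are textbook approximations that we are assuming here, not numbers from the article, and Jupiter has no solid ground, so its value is taken at the cloud tops.

```python
# Astro's mass, as quoted in the article.
ASTRO_MASS_KG = 18

# Approximate surface gravity in m/s^2 (assumed textbook values).
# Jupiter's figure is at the cloud tops, since there is no solid surface.
SURFACE_GRAVITY = {"Moon": 1.62, "Earth": 9.81, "Jupiter": 24.79}

# Approximate mass of the Sun in kg (assumed textbook value).
SUN_MASS_KG = 1.989e30


def weight_newtons(mass_kg, gravity):
    """Weight is the force of gravity on a mass: W = m * g."""
    return mass_kg * gravity


# Same mass everywhere, different weight on each body.
weights = {body: weight_newtons(ASTRO_MASS_KG, g) for body, g in SURFACE_GRAVITY.items()}
for body, weight in weights.items():
    print(f"On {body}, Astro weighs about {weight:.0f} N but is still {ASTRO_MASS_KG} kg")

# Andromeda at roughly three trillion solar masses, expressed in Astros.
andromeda_mass_kg = 3e12 * SUN_MASS_KG
andromeda_in_astros = andromeda_mass_kg / ASTRO_MASS_KG
print(f"Andromeda is roughly {andromeda_in_astros:.0e} Astros")
```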

Astronomical units and parsecs

Astronomers also use comparisons to measure how far apart things are. The Sun and Earth are about 150 million kilometres apart, and we give this distance a name: an astronomical unit (AU).

For an even twistier unit of distance, we use the parsec (insert Han Solo Kessel run joke here). Parsec is short for “parallax second”, and if you remember your trigonometry, this is the length of the adjacent side of a right-angle triangle when the angle is 1 arcsecond (1/3,600 of a degree) and the “opposite” side of the triangle is 1 AU.

Parsecs are handy for measuring even bigger distances because 1 parsec = 206,265 AU. For example, the centre of our very own galaxy, the Milky Way, is about 8,000 parsecs away from Earth, or about 1.65 billion AU.
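If your trigonometry is rusty, the parsec triangle can be worked through numerically. The sketch below assumes nothing beyond the 1 arcsecond angle and the 1 AU “opposite” side described above; the variable names are ours. It also shows that, for an angle this tiny, the two long sides of the triangle are the same length for all practical purposes.

```python
import math

# One arcsecond is 1/3,600 of a degree; convert to radians for the trig functions.
ONE_ARCSECOND = math.radians(1 / 3600)

# With the "opposite" side fixed at 1 AU:
#   adjacent   = opposite / tan(angle)
#   hypotenuse = opposite / sin(angle)
parsec_au = 1 / math.tan(ONE_ARCSECOND)
hypotenuse_au = 1 / math.sin(ONE_ARCSECOND)

print(f"1 parsec is about {parsec_au:,.0f} AU")
print(f"The hypotenuse is longer by only {hypotenuse_au - parsec_au:.1e} AU")

# Distance to the centre of the Milky Way, using the article's 8,000 parsecs.
galactic_centre_au = 8_000 * parsec_au
print(f"Galactic centre: about {galactic_centre_au / 1e9:.2f} billion AU")
```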

Magnitudes

If we want to measure how bright something is, astronomical units of measurement get even weirder. In the second century BC, the ancient Greek astronomer Hipparchus looked up at space and gave the brightest stars a value of 1 and the faintest stars a value of 6.

Notice here that a brighter star has a lower number. We call these brightness values “magnitudes”. The Sun has an apparent magnitude of –26!

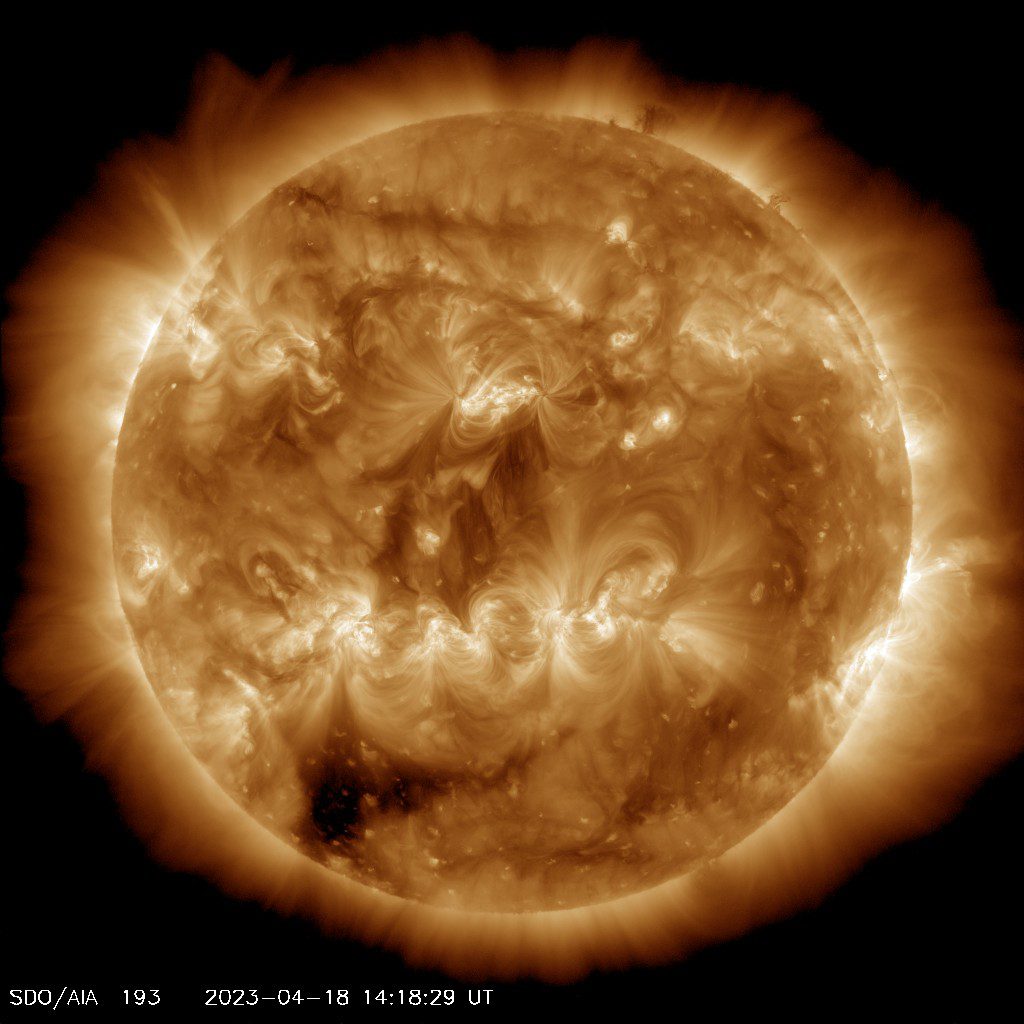

An image of the Sun taken by NASA’s Solar Dynamics Observatory. Image credit: NASA/SDO

Even more confusing than a negative brightness, each single step in magnitude is a 2.512 times difference in brightness. The star Vega has an apparent magnitude of 0, which is two and a bit times brighter than the star Antares with an apparent magnitude of 1.
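The 2.512 comes from the modern convention that a difference of 5 magnitudes is exactly a factor of 100 in brightness, so one magnitude is the fifth root of 100. That convention is not spelled out above, so treat it as an assumption of this sketch, along with the hypothetical function name.

```python
def brightness_ratio(fainter_mag, brighter_mag):
    """How many times brighter the lower-magnitude object appears.

    Assumes the standard convention: 5 magnitudes = a factor of 100,
    so the ratio is 10 ** (0.4 * magnitude difference).
    """
    return 10 ** (0.4 * (fainter_mag - brighter_mag))


# Vega (magnitude 0) compared with Antares (magnitude about 1).
vega_vs_antares = brightness_ratio(1, 0)
print(f"Vega appears about {vega_vs_antares:.3f} times brighter than Antares")

# The Sun (magnitude about -26) compared with Vega (magnitude 0).
sun_vs_vega = brightness_ratio(0, -26)
print(f"The Sun appears about {sun_vs_vega:.1e} times brighter than Vega")
```

Because the scale is logarithmic, the Sun’s modest-looking –26 works out to tens of billions of Vegas.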

At last, the milliCrab

The light we see with our eyes is, for obvious reasons, called “visible” light. The light we use to take pictures of your bones is called X-ray light.

When astronomers use X-ray light to observe the sky we sometimes measure brightness in “Crabs”.

The Crab is a rapidly spinning neutron star (or pulsar) in the remains of an exploded star that is extremely bright when we look at it using our X-ray telescopes. It’s so bright in X-ray light that astronomers have been using it to calibrate their telescopes since the 1970s.

Image of the Crab Nebula where red is radio from the Very Large Array, yellow is infra-red from the Spitzer Space Telescope, green is visible from the Hubble Space Telescope, blue is ultraviolet from XMM-Newton, and purple is X-ray from the Chandra X-ray Observatory. Image credit: NASA, ESA, G. Dubner (IAFE, CONICET-University of Buenos Aires) et al.; A. Loll et al.; T. Temim et al.; F. Seward et al.; VLA/NRAO/AUI/NSF; Chandra/CXC; Spitzer/JPL-Caltech; XMM-Newton/ESA; and Hubble/STScI

So every X-ray astronomer knows how bright a Crab is. And if we’re talking about a particular object, say a black hole binary system called GX339-4, and it’s only five thousandths as bright as the Crab, we say it’s 5 milliCrab bright.

But buyer beware! The brightness of the Crab is different depending on what energy of X-ray light you’re looking at, and it also changes over time.
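In practice, a brightness in milliCrab is just a ratio: the source’s X-ray brightness divided by the Crab’s, times 1,000. Here is a minimal sketch of that conversion. The function name and the example numbers are hypothetical, and the two measurements are assumed to be in the same units, in the same X-ray energy band, and taken at a comparable time.

```python
def to_millicrab(source_brightness, crab_brightness):
    """Express a source's X-ray brightness in milliCrab.

    Both values must use the same units and the same energy band,
    because the Crab's own brightness depends on the band (and drifts over time).
    """
    if crab_brightness <= 0:
        raise ValueError("The Crab's brightness must be positive")
    return 1000 * source_brightness / crab_brightness


# Hypothetical example in arbitrary units: a source five thousandths
# as bright as the Crab in the chosen band.
example_millicrab = to_millicrab(source_brightness=0.005, crab_brightness=1.0)
print(f"{example_millicrab:.0f} milliCrab")
```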

Whether we use lions or tigers or Crabs, astronomers make sure to define the units we’re using. There’s no use using an armadillo, or even your local whippet, unless you’ve made sure the definition is clear.

Laura Nicole Driessen, Postdoctoral researcher in radio astronomy, University of Sydney

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Source Link: From Platypus To Parsecs And Millicrab: Why Do Astronomers Use Such Weird Units?